Some useful tips on Linux commands, programming skills, and more. All of the tips are taken from websites. I keep adding tips here to make up for my bad memory. 😂

Linux Commands

grep

Find all files containing specific text (string) on Linux

grep -rnw '/path/to/somewhere' -e 'pattern'

-r or -R is recursive, -n is line number, and -w stands for match the whole word. -l (lower-case L) can be added to just give the file name of matching files. -e is the pattern used during the search.

Along with these, the --exclude, --include, and --exclude-dir flags can be used for efficient searching:

- This will only search through files that have .c or .h extensions:

grep --include=\*.{c,h} -rnw '/path/to/somewhere/' -e "pattern"

- This will skip all files ending with the .o extension:

grep --exclude=\*.o -rnw '/path/to/somewhere/' -e "pattern"

- For directories, it’s possible to exclude one or more directories using the --exclude-dir parameter. For example, this will exclude the dirs dir1/, dir2/ and all of them matching *.dst/:

grep --exclude-dir={dir1,dir2,*.dst} -rnw '/path/to/search/' -e "pattern"

sshpass

If we want to SSH into a server with a single command, we can use sshpass. Install it with:

sudo apt-get install sshpass

Example:

sshpass -p 'mySSHPasswordHere' ssh username@server.nixcraft.net.in

rsync

Rsync, which stands for remote sync, is a remote and local file synchronization tool. It uses an algorithm to minimize the amount of data copied by only moving the portions of files that have changed.

rsync -azvP source destination

# example

rsync -azvP yanfeit@ipaddress:path/to/folder ./

ssh key login

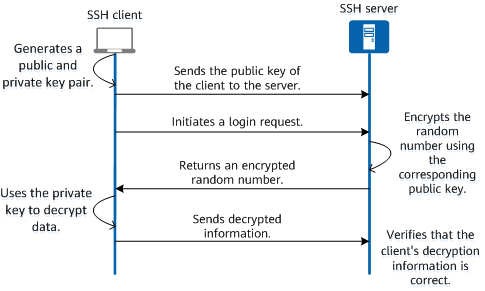

Key authentication is widely used and recommended. The user generates a public/private key pair on the client with an algorithm such as RSA, Ed25519, DSA, or ECDSA. The user first sends the public key to the server. Conceptually, when the user later logs in, the server generates a random number, encrypts it with the public key, and sends the encrypted number to the client. The client decrypts the data with its private key and returns the decrypted value. Finally, the server verifies whether the returned value is correct.

Fig. The principle of ssh key login


Below is an example showing how to use the SSH key login method. We can first check whether we already have SSH keys.

ls -al ~/.ssh

# Lists the files in your .ssh directory, if they exist

# e.g., id_rsa.pub id_ecdsa.pub id_ed25519.pub

ssh-keygen -t ed25519 -C "your_email@address.com" # generate an Ed25519 key pair; -C attaches a comment (here an email address) to the key

Typically, we can store the keys in the default folder ~/.ssh/. Then the public key is sent to the server.

ssh-copy-id -i ~/.ssh/id_ed25519.pub your_account_name@server1_ip

You will be asked to enter the account password so that the key can be copied to the server.

Do this again on the second server.

ssh-copy-id -i ~/.ssh/id_ed25519.pub your_account_name@server2_ip

For GitHub.com or Gitee.com, paste the public key into the SSH keys section of the account settings.

cat ~/.ssh/id_ed25519.pub

split and cat large files

We can use the split command to split files, which supports both text and binary file splitting, and the cat command to merge files.

To split a file by size, specify the size of each piece with the -b argument.

split -b 4096m large_file.tar.gz small_file

We can then use the cat command to merge the pieces back together.

cat small_file* > original_file

nohup

We can redirect nohup output to a log file like this,

nohup myprogram > myprogram.out 2> myprogram.err & # write stdout and stderr to separate files

nohup myprogram > myprogram.out 2>&1 & # write stdout and stderr to the same file

# Don't forget the & at the end.

Sphinx

make html # create html files

cp -r build/html/. /home/nginx/html # copy the HTML files to the nginx directory

Start A Downloading Server

python -m http.server

This starts an HTTP server (on port 8000 by default) that serves the current directory, so colleagues can download files from it.

Programming

Reading arguments from the command line is often useful. Below is a typical way to read arguments in C.

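/* Needs <string.h> for strcmp. argv[0] is the program name, so start at 1. */
for (int i = 1; i < argc; i++) {
    if ((strcmp(argv[i], "-i") == 0) || (strcmp(argv[i], "--input_file") == 0)) {
        if (i + 1 < argc) { /* make sure a value follows the flag */
            input_file = argv[++i];
        }
        continue;
    }
}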
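The loop above can be wrapped in a small helper so that the bounds check lives in one place. This is only a minimal sketch: the function name find_input_file is hypothetical, and it assumes the same -i / --input_file flags as the snippet above.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical helper: returns the value that follows -i or --input_file,
 * or NULL if no such flag with a value is present. If the flag appears
 * several times, the last occurrence that has a value wins. */
const char *find_input_file(int argc, char **argv)
{
    assert(argc >= 0 && argv != NULL);
    const char *input_file = NULL;

    /* argv[0] is the program name, so option scanning starts at index 1. */
    for (int i = 1; i < argc; i++) {
        if (strcmp(argv[i], "-i") == 0 || strcmp(argv[i], "--input_file") == 0) {
            if (i + 1 < argc) {
                input_file = argv[++i]; /* consume the value as well */
            }
        }
    }
    return input_file;
}
```

From main, this could be called as find_input_file(argc, argv); a NULL result means the flag was not supplied or had no value.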

Message Passing Interface

These tips are taken from the mpitutorial website.

Basic

MPI_Init(int* argc, char*** argv)

During MPI_Init, all of MPI’s global and internal variables are constructed. For example, a communicator is formed around all of the processes that were spawned, and unique ranks are assigned to each process.

MPI_Comm_size(MPI_Comm communicator, int* size)

MPI_Comm_size returns the size of a communicator. For example,

MPI_Comm_size(MPI_COMM_WORLD, &world_size);

MPI_COMM_WORLD encloses all of the processes in the job. Therefore, this call should return the number of processes that were requested for the job.

MPI_Comm_rank(MPI_Comm communicator, int* rank)

MPI_Comm_rank returns the rank of a process in a communicator. Each process inside of a communicator is assigned an incremental rank starting from zero.

A miscellaneous and less-used function is:

MPI_Get_processor_name(char* name, int* name_length)

MPI_Get_processor_name obtains the actual name of the processor on which the process is executing.

MPI_Finalize()

MPI_Finalize() is used to clean up the MPI environment. No more MPI calls can be made after this one.

Send and Receive

MPI_Send(void* data, int count, MPI_Datatype datatype, int destination, int tag, MPI_Comm communicator)

MPI_Recv(void* data, int count, MPI_Datatype datatype, int source, int tag, MPI_Comm communicator, MPI_Status* status)

The first argument is the data buffer. The second and third arguments describe the count and type of the elements that reside in the buffer. MPI_Send sends exactly count elements, and MPI_Recv receives at most count elements. The fourth and fifth arguments specify the rank of the receiving process (for MPI_Send) or the sending process (for MPI_Recv) and the tag of the message. The sixth argument specifies the communicator, and the last argument (for MPI_Recv only) provides information about the received message.

MPI_Status and MPI_Probe

The three primary pieces of information in MPI_Status are:

- The rank of the sender. For example, if we declare an MPI_Status stat variable, the rank can be accessed with stat.MPI_SOURCE.
- The tag of the message. The tag can be accessed through the MPI_TAG element of the structure (similar to MPI_SOURCE).
- The length of the message, which is obtained with MPI_Get_count:

MPI_Get_count(MPI_Status* status, MPI_Datatype datatype, int* count)

In MPI_Get_count, the user passes the MPI_Status structure, the datatype of the message, and count is returned. The count variable is the total number of datatype elements that were received.

We can use MPI_Probe to query the message size before actually receiving it. The function prototype looks like this.

MPI_Probe(int source, int tag, MPI_Comm comm, MPI_Status* status)

MPI_Probe looks quite similar to MPI_Recv. In fact, you can think of MPI_Probe as an MPI_Recv that does everything but receive the message. Similar to MPI_Recv, MPI_Probe will block for a message with a matching tag and sender. When the message is available, it will fill the status structure with information. The user can then use MPI_Recv to receive the actual message.

Broadcast

MPI_Barrier(MPI_Comm communicator)

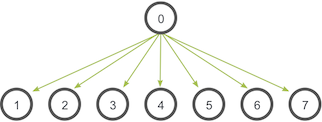

Fig. The communication pattern of a broadcast


MPI_Bcast(void* data, int count, MPI_Datatype datatype, int root, MPI_Comm communicator)

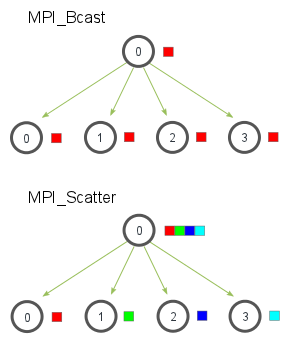

MPI Scatter, Gather, and Allgather

Fig. The communication pattern of a scatter


MPI_Scatter(void* send_data, int send_count, MPI_Datatype send_datatype, void* recv_data,

int recv_count, MPI_Datatype recv_datatype, int root, MPI_Comm communicator)

The first parameter, send_data, is an array of data that resides on the root process. The second and third parameters, send_count and send_datatype, dictate how many elements of a specific MPI datatype will be sent to each process. If send_count is one and send_datatype is MPI_INT, then process zero gets the first integer of the array, process one gets the second integer, and so on. If send_count is two, then process zero gets the first and second integers, process one gets the third and fourth, and so on. The recv_data parameter is a buffer of data that can hold recv_count elements that have a datatype of recv_datatype. The last parameters, root and communicator, indicate the root process that is scattering the array of data and the communicator in which the processes reside.
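The element-to-process mapping described above can be modelled in plain C. This sketch makes no MPI calls and is not how an MPI library implements scatter; the function name model_scatter_int is hypothetical, and it assumes MPI_INT data with every rank receiving the same number of elements.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Plain-C model of the MPI_Scatter layout for MPI_INT data. No MPI call is
 * made: it copies the send_count elements that the process with the given
 * rank would receive out of the root's send_data array. */
void model_scatter_int(const int *send_data, int send_count, int rank, int *recv_data)
{
    assert(send_count >= 0 && rank >= 0);
    /* Rank r receives elements [r * send_count, (r + 1) * send_count). */
    const int *chunk = send_data + (size_t)rank * (size_t)send_count;
    memcpy(recv_data, chunk, (size_t)send_count * sizeof(int));
}
```

With a root array of {10, 20, 30, 40, 50, 60} and send_count equal to two, rank 1 in this model ends up with {30, 40}, matching the "third and fourth" case in the text.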

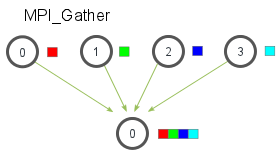

Fig. The communication pattern of a gather


MPI_Gather(void* send_data, int send_count, MPI_Datatype send_datatype, void* recv_data,

int recv_count, MPI_Datatype recv_datatype, int root, MPI_Comm communicator)

In MPI_Gather, only the root process needs to have a valid receive buffer. All other calling processes can pass NULL for recv_data. Also, don’t forget that the recv_count parameter is the count of elements received per process, not the total summation of counts from all processes.
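The per-process meaning of recv_count is easy to get wrong, so here is a plain-C model of the layout at the root. It makes no MPI calls; the function name model_gather_int and the send_bufs array (one pointer per rank, standing in for each rank's send_data) are assumptions made only for this illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Plain-C model of MPI_Gather for MPI_INT data (no MPI call is made).
 * send_bufs[r] stands in for the send_data of rank r. recv_data belongs to
 * the root and must hold world_size * recv_count elements in total, even
 * though recv_count itself is the per-process count. */
void model_gather_int(const int *const *send_bufs, int world_size,
                      int recv_count, int *recv_data)
{
    assert(world_size > 0 && recv_count >= 0);
    for (int rank = 0; rank < world_size; rank++) {
        /* The contribution of rank r lands at offset r * recv_count. */
        memcpy(recv_data + (size_t)rank * (size_t)recv_count,
               send_bufs[rank], (size_t)recv_count * sizeof(int));
    }
}
```

With three ranks and recv_count equal to two, the root buffer in this model needs room for six integers, ordered by rank.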

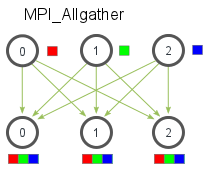

Fig. The communication pattern of an Allgather


MPI_Allgather(void* send_data, int send_count, MPI_Datatype send_datatype,

void* recv_data, int recv_count, MPI_Datatype recv_datatype, MPI_Comm communicator)

The function declaration for MPI_Allgather is almost identical to MPI_Gather with the difference that there is no root process in MPI_Allgather.

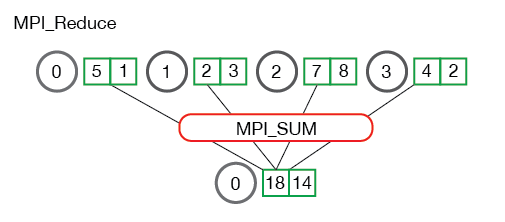

MPI Reduce and Allreduce

Fig. The communication pattern of a reduce


MPI_Reduce(void* send_data, void* recv_data, int count, MPI_Datatype datatype,

MPI_Op op, int root, MPI_Comm communicator)

The send_data parameter is an array of elements of type datatype that each process wants to reduce. The recv_data is only relevant on the process with a rank of root. The recv_data array contains the reduced result and has a size of sizeof(datatype) * count. The op parameter is the operation that you wish to apply to your data.
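To make the shape of the result concrete, the following plain-C model shows a reduction with a sum as the operation (MPI_SUM in real MPI code). It makes no MPI calls; the function name model_reduce_sum_int and the send_bufs array (one pointer per rank) are hypothetical, and MPI_INT data is assumed.

```c
#include <assert.h>
#include <stddef.h>

/* Plain-C model of MPI_Reduce with MPI_SUM on MPI_INT data (no MPI call is
 * made). send_bufs[r] stands in for the send_data of rank r; each holds
 * count elements. recv_data also holds count elements: element i of the
 * result is the sum of element i across all ranks. */
void model_reduce_sum_int(const int *const *send_bufs, int world_size,
                          int count, int *recv_data)
{
    assert(world_size > 0 && count >= 0);
    for (int i = 0; i < count; i++) {
        int sum = 0;
        for (int rank = 0; rank < world_size; rank++) {
            sum += send_bufs[rank][i];
        }
        recv_data[i] = sum;
    }
}
```

The reduction is element-wise: with three ranks holding {1, 10}, {2, 20}, and {3, 30}, the root in this model receives {6, 60}, not a single scalar.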

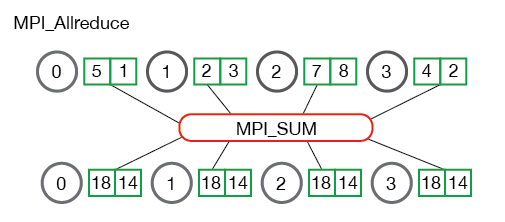

Fig. The communication pattern of an Allreduce


MPI_Allreduce(void* send_data, void* recv_data, int count,

MPI_Datatype datatype, MPI_Op op, MPI_Comm communicator)